Capturing Hand Motion with an RGB-D Sensor, Fusing a Generative Model with Salient Points

Dimitrios Tzionas, Abhilash Srikantha, Pablo Aponte, and Juergen Gall

Abstract

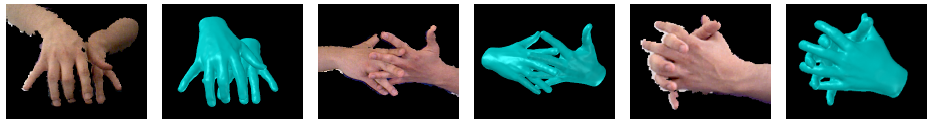

Hand motion capture has been an active research topic, following the success of full-body pose tracking. Despite the similarities, hand tracking proves more challenging because of its higher dimensionality, severe occlusions, and the self-similarity between fingers. For this reason, most approaches rely on strong assumptions, such as hands in isolation or expensive multi-camera systems, that limit practical use. In this work, we propose a framework for hand tracking that can capture the motion of two interacting hands using only a single, inexpensive RGB-D camera. Our approach combines a generative model with collision detection and discriminatively learned salient points. We quantitatively evaluate our approach on 14 new sequences with challenging interactions.

Images/Video

Video (YouTube)

Data

If you have questions concerning the data, please contact Dimitrios Tzionas.

Publications

Tzionas D., Ballan L., Srikantha A., Aponte P., Pollefeys M., and Gall J., Capturing Hands in Action using Discriminative Salient Points and Physics Simulation (PDF), International Journal of Computer Vision, Special Issue on Human Activity Understanding from 2D and 3D data, Vol. 118(2), 172-193, Springer, 2016. ©Springer-Verlag

Tzionas D., Srikantha A., Aponte P., and Gall J., Capturing Hand Motion with an RGB-D Sensor, Fusing a Generative Model with Salient Points (PDF), German Conference on Pattern Recognition (GCPR'14), Springer, LNCS 8753, 277-289, 2014. ©Springer-Verlag

Supplementary Material: Capturing Hand Motion with an RGB-D Sensor, Fusing a Generative Model with Salient Points (PDF).

Ballan L., Taneja A., Gall J., van Gool L., and Pollefeys M., Motion Capture of Hands in Action using Discriminative Salient Points (PDF), European Conference on Computer Vision (ECCV'12), Springer, LNCS 7577, 640-653, 2012. ©Springer-Verlag