Functional Categorization of Objects using Real-time Markerless Motion Capture

Juergen Gall, Andrea Fossati, and Luc Van Gool

Abstract

Unsupervised categorization of objects is a fundamental problem in computer vision. While appearance-based methods have become popular recently, other important cues like functionality have been largely neglected. Motivated by psychological studies providing evidence that human demonstration has a facilitative effect on categorization in infancy, we propose an approach for object categorization from depth video streams. To this end, we have developed a method for capturing human motion in real time. The captured data is then used to temporally segment the depth streams into actions. The segmented actions are then categorized in an unsupervised manner through a novel descriptor for motion capture data that is robust to subject variations. Furthermore, we automatically localize the object that is manipulated within a video segment and categorize it using the corresponding action. For evaluation, we have recorded a dataset that comprises depth data with registered video sequences for 6 subjects, 13 action classes, and 174 object manipulations.
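The abstract describes the pipeline only at a high level. The following is a minimal, hypothetical Python sketch of the last two steps: clustering segmented actions without labels, then giving each manipulated object the category of its action. It does not reproduce the paper's descriptor or clustering method. The root-relative, size-normalized, time-resampled joint descriptor and the k-means with farthest-point initialization are stand-ins chosen for illustration. The joint layout (joint 0 as the root), the helper names, the object names, and the synthetic "lift"/"push" segments are all assumptions.

```python
import numpy as np

def segment_descriptor(joints, n_samples=10):
    """Hypothetical descriptor for one action segment.

    joints: array of shape (T, J, 3) with 3D joint positions; joint 0 is
    assumed to be the root. Positions are made root-relative (removes global
    translation), divided by their mean distance to the root (crude
    normalization for subject size), and linearly resampled to n_samples
    frames (removes differences in segment length).
    """
    joints = np.asarray(joints, dtype=float)
    rel = joints - joints[:, :1, :]
    scale = np.linalg.norm(rel, axis=2).mean()
    rel = rel / (scale if scale > 0 else 1.0)
    T = rel.shape[0]
    t = np.linspace(0, T - 1, n_samples)
    lo = np.floor(t).astype(int)
    hi = np.minimum(lo + 1, T - 1)
    w = (t - lo)[:, None, None]
    return ((1 - w) * rel[lo] + w * rel[hi]).ravel()

def farthest_point_init(X, k):
    # Deterministic seeding: start from X[0], then repeatedly add the point
    # farthest from all centers chosen so far.
    centers = [X[0]]
    for _ in range(1, k):
        d = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[d.argmax()])
    return np.array(centers)

def kmeans(X, k, n_iter=100):
    # Plain Lloyd iterations on the descriptor vectors.
    centers = farthest_point_init(X, k)
    for _ in range(n_iter):
        d = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d.argmin(axis=1)
        new_centers = np.array([
            X[labels == c].mean(axis=0) if np.any(labels == c) else centers[c]
            for c in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels

def synth_segment(kind, scale, T, offset):
    # Hypothetical 3-joint skeleton (root, elbow, hand) for a subject of the
    # given size; "lift" raises the hand, "push" moves it forward.
    t = np.linspace(0, 1, T)
    root = np.zeros((T, 3)) + offset
    elbow = root + scale * np.array([0.3, 0.0, 0.0])
    motion = 0.6 * t
    if kind == "lift":
        hand = root + scale * np.stack([0.6 * np.ones(T), motion, 0 * t], axis=1)
    else:
        hand = root + scale * np.stack([0.6 * np.ones(T), 0 * t, motion], axis=1)
    return np.stack([root, elbow, hand], axis=1)

# Four segments from subjects of different sizes, speeds, and positions.
segments = [
    synth_segment("lift", 1.0, 30, 0.0),
    synth_segment("push", 1.2, 40, 1.0),
    synth_segment("lift", 0.8, 25, -2.0),
    synth_segment("push", 0.9, 35, 3.0),
]
X = np.array([segment_descriptor(s) for s in segments])
labels = kmeans(X, k=2)

# Each manipulated object inherits the cluster label of its action.
objects = ["cup", "drawer", "bottle", "door"]  # hypothetical object names
object_category = dict(zip(objects, labels.tolist()))
print(object_category)
```

Here the two "lift" segments and the two "push" segments end up in the same cluster despite differing in subject size, duration, and position. The objects manipulated with the same motion therefore share a category, which mirrors the idea that functionality, not appearance, drives the grouping.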

Images/Videos

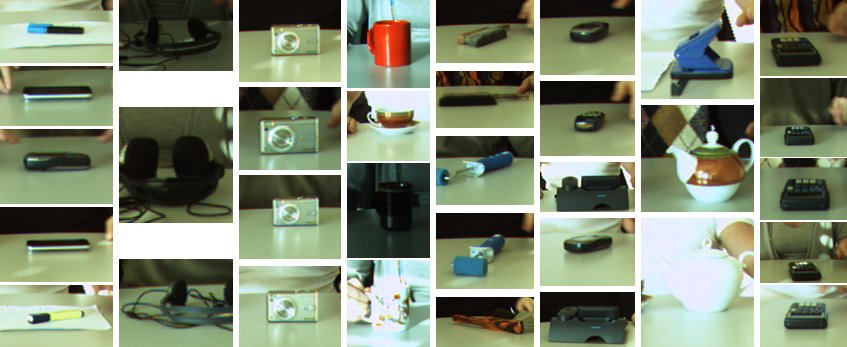

A subset of objects that have been extracted from the dataset and categorized according to the human motion observed during object manipulation. Each column shows representative objects assigned to the same class.

Video ~30MB (AVI)

Data

All data is provided for research purposes only. When using this data, please acknowledge the effort that went into data collection by citing the corresponding paper. If you have questions concerning the data, please contact Juergen Gall. Annotation of the data and a program for visualization: scripts.tar.gz. Video data (each file is less than 1.2GB):

Publications

Gall J., Fossati A., and Van Gool L., Functional Categorization of Objects using Real-time Markerless Motion Capture (PDF), IEEE Conference on Computer Vision and Pattern Recognition (CVPR'11), pp. 1969-1976, 2011. ©IEEE