Incremental Learning of NCM Forests for Large-Scale Image Classification

Marko Ristin, Matthieu Guillaumin, Juergen Gall, and Luc Van Gool

Abstract

In recent years, large image data sets such as ImageNet, TinyImages or ever-growing social networks like Flickr have emerged, posing new challenges to image classification that were not apparent in smaller image sets. In particular, the efficient handling of dynamically growing data sets, where not only the number of training images but also the number of classes increases over time, is a relatively unexplored problem. To remedy this, we introduce Nearest Class Mean Forests (NCMF), a variant of Random Forests where the decision nodes are based on nearest class mean (NCM) classification. NCMFs not only outperform conventional random forests, but are also well suited for integrating new classes. To this end, we propose and compare several approaches to incorporate data from new classes, so as to seamlessly extend the previously trained forest instead of re-training it from scratch. In our experiments, we show that NCMFs trained on small data sets with 10 classes can be extended to large data sets with 1000 classes without significant loss of accuracy compared to training from scratch on the full data.
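The NCM decision rule at a single node, and the reason it lends itself to adding classes, can be sketched as follows. This is a minimal illustration, not the authors' implementation: the class name `NCMNode`, the use of Euclidean distance, and the caller-supplied left/right direction for each centroid are assumptions made here. How directions are chosen during training is outside this sketch, and routing a new class to a fixed direction is only one hypothetical way to integrate it.

```python
import numpy as np


class NCMNode:
    """Hypothetical NCM decision node: a set of class centroids, each
    associated with a child direction (0 = left, 1 = right)."""

    def __init__(self):
        self.centroids = {}  # class label -> mean feature vector
        self.direction = {}  # class label -> 0 (left) or 1 (right)

    def add_class(self, label, samples, direction):
        # A class centroid is the mean of that class's feature vectors.
        # Registering a new class leaves the existing centroids untouched.
        self.centroids[label] = np.mean(np.asarray(samples, dtype=float), axis=0)
        self.direction[label] = direction

    def route(self, x):
        # Send x in the direction associated with its nearest centroid.
        x = np.asarray(x, dtype=float)
        labels = list(self.centroids)
        dists = [np.linalg.norm(x - self.centroids[c]) for c in labels]
        return self.direction[labels[int(np.argmin(dists))]]


node = NCMNode()
node.add_class("cat", [[0, 0], [0, 2]], direction=0)  # centroid (0, 1)
node.add_class("dog", [[4, 4], [6, 4]], direction=1)  # centroid (5, 4)
before = node.route([9, 1])                           # nearest is "dog" -> 1
node.add_class("car", [[10, 0], [10, 2]], direction=0)  # new class, centroid (10, 1)
after = node.route([9, 1])                            # nearest is now "car" -> 0
```

Adding "car" only required computing one new mean; the node's behaviour changes for samples near the new centroid while the stored statistics of the old classes are reused as-is.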

Images

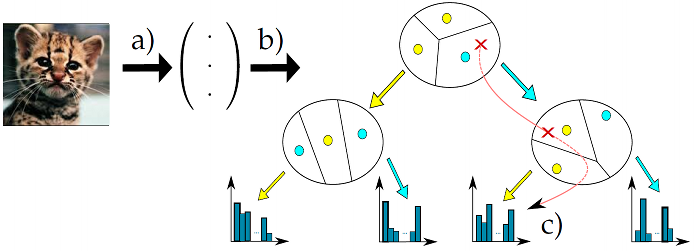

Classification of an image (illustrated by the red cross) by a single tree. (a) The feature vector is extracted, (b) the image is assigned to the closest centroid (colors indicate further direction), (c) the image is assigned the class probability found at the leaf.
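The three steps in the caption can be sketched for a single binary tree as below. This is an illustrative sketch under assumptions: the `Node`/`classify` names, Euclidean distance, and one boolean left/right flag per centroid are chosen here for illustration, and the feature vector of step (a) is taken as given rather than extracted from an image.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

import numpy as np


@dataclass
class Node:
    centroids: Optional[np.ndarray] = None       # (k, d) class means at an internal node
    go_right: Optional[List[bool]] = None         # direction attached to each centroid
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    class_probs: Optional[Dict[str, float]] = None  # set only at leaves


def classify(tree: Node, x) -> Dict[str, float]:
    """Route feature vector x to a leaf and return its class probabilities."""
    x = np.asarray(x, dtype=float)  # (a) the feature vector is assumed given
    node = tree
    while node.class_probs is None:
        # (b) assign x to the closest centroid; its flag gives the direction.
        k = int(np.argmin(np.linalg.norm(node.centroids - x, axis=1)))
        node = node.right if node.go_right[k] else node.left
    # (c) return the class probabilities stored at the leaf.
    return node.class_probs


leaf_a = Node(class_probs={"cat": 0.9, "dog": 0.1})
leaf_b = Node(class_probs={"cat": 0.2, "dog": 0.8})
root = Node(centroids=np.array([[0.0, 1.0], [5.0, 4.0]]),
            go_right=[False, True], left=leaf_a, right=leaf_b)
```

For example, `classify(root, [1.0, 1.0])` reaches `leaf_a`, because the first centroid is closest. For a forest, the per-tree distributions would typically be averaged, as in standard random forests.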

Source Code

If you have questions concerning the data, please contact Marko Ristin.

Publications

Ristin M., Guillaumin M., Gall J., and Van Gool L., Incremental Learning of Random Forests for Large-Scale Image Classification (PDF), IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 38, No. 3, 490-503, 2016. ©IEEE

Ristin M., Guillaumin M., Gall J., and Van Gool L., Incremental Learning of NCM Forests for Large-Scale Image Classification (PDF), IEEE Conference on Computer Vision and Pattern Recognition (CVPR'14), 3654-3661, 2014. ©IEEE