Towards Understanding Action Recognition

Hueihan Jhuang, Juergen Gall, Silvia Zuffi, Cordelia Schmid, and Michael J. Black

Abstract

Although action recognition in videos is widely studied, current methods often fail on real-world datasets. Many recent approaches improve accuracy and robustness to cope with challenging video sequences, but it is often unclear what affects the results most. This paper attempts to provide insights based on a systematic performance evaluation using thoroughly annotated data of human actions. We annotate human Joints for the HMDB dataset (J-HMDB). This annotation can be used to derive ground truth optical flow and segmentation. We evaluate current methods using this dataset and systematically replace the output of various algorithms with ground truth. This enables us to discover what is important: for example, should we work on improving flow algorithms, estimating human bounding boxes, or enabling pose estimation? In summary, we find that high-level pose features greatly outperform low/mid-level features; in particular, pose over time is critical, but current pose estimation algorithms are not yet reliable enough to provide this information. We also find that the accuracy of a top-performing action recognition framework can be greatly increased by refining the underlying low/mid-level features; this suggests it is important to improve optical flow and human detection algorithms. Our analysis and the J-HMDB dataset should facilitate a deeper understanding of action recognition algorithms.

Images

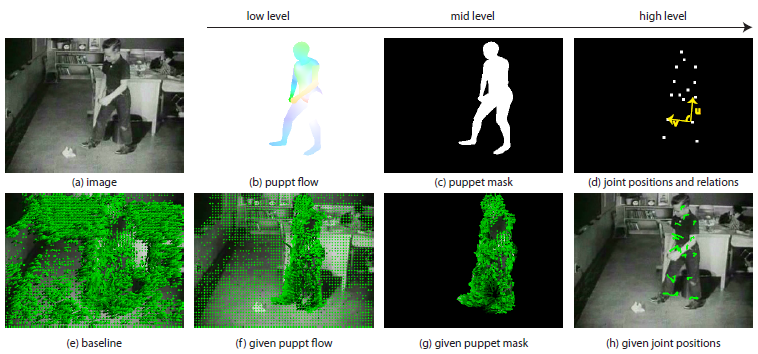

Overview of our annotation and evaluation. (a-d) A video frame annotated by a puppet model: (a) image frame, (b) puppet flow, (c) puppet mask, (d) joint positions and relations. Three types of joint relations are used: (1) the distance and (2) the orientation of the vector connecting a pair of joints, i.e., the magnitude and direction of the vector u; and (3) the inner angle spanned by two vectors connecting a triple of joints, i.e., the angle between the vectors u and v. (e-h) From left to right, we gradually provide the baseline algorithm (e) with different levels of ground truth from (b) to (d). The trajectories are displayed in green.
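The three joint relations named in the caption can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names, the 2D `(x, y)` tuple representation of a joint, and the choice of the middle joint as the apex of the inner angle are all assumptions made for illustration.

```python
import math

# Hypothetical helpers; joints are assumed to be 2D (x, y) image coordinates.

def joint_distance(a, b):
    """Magnitude of the vector u connecting joint a to joint b."""
    return math.hypot(b[0] - a[0], b[1] - a[1])

def joint_orientation(a, b):
    """Direction of u = b - a in radians, as returned by math.atan2.

    In image coordinates the y axis usually points down, so the sign
    convention differs from the usual mathematical one.
    """
    return math.atan2(b[1] - a[1], b[0] - a[0])

def inner_angle(a, b, c):
    """Angle in [0, pi] between u = a - b and v = c - b.

    Assumption: the middle joint b is taken as the apex of the triple.
    """
    ux, uy = a[0] - b[0], a[1] - b[1]
    vx, vy = c[0] - b[0], c[1] - b[1]
    cross = ux * vy - uy * vx  # proportional to sin of the angle
    dot = ux * vx + uy * vy    # proportional to cos of the angle
    return math.atan2(abs(cross), dot)

# Example with made-up joint positions (not taken from J-HMDB).
shoulder, elbow, wrist = (0.0, 0.0), (1.0, 0.0), (1.0, 1.0)
upper_arm_length = joint_distance(shoulder, elbow)
upper_arm_direction = joint_orientation(shoulder, elbow)
elbow_angle = inner_angle(shoulder, elbow, wrist)
```

Using `atan2` on the cross and dot products, rather than `acos` of a normalized dot product, avoids domain errors from rounding when the two vectors are nearly parallel.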

Data

If you have questions concerning the data, please contact Hueihan Jhuang.

Publications

Jhuang H., Gall J., Zuffi S., Schmid C., and Black M., Towards Understanding Action Recognition, International Conference on Computer Vision (ICCV'13), pp. 3192-3199, 2013. ©IEEE