Video-based Reconstruction of Animatable Human Characters

Carsten Stoll, Juergen Gall, Edilson de Aguiar, Sebastian Thrun, and Christian Theobalt

Abstract

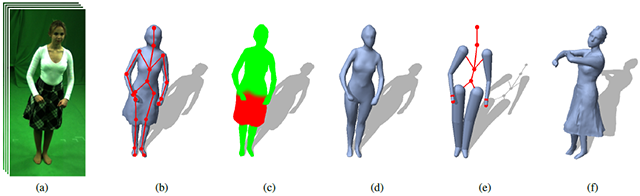

We present a new performance capture approach that incorporates a physically-based cloth model to reconstruct a rigged, fully animatable virtual double of a real person in loose apparel from multi-view video recordings. Our algorithm requires only a minimum of manual interaction. Without the use of optical markers in the scene, it first reconstructs the skeleton motion and detailed time-varying surface geometry of a real person from a reference video sequence. The captured reference performance data are then analyzed to automatically identify non-rigidly deforming pieces of apparel on the animated geometry. For each piece of apparel, the parameters of a physically-based real-time cloth simulation model are estimated, and the surface geometry of occluded body regions is approximated. The reconstructed character model comprises a skeleton-based representation for the actual body parts and a physically-based simulation model for the apparel. In contrast to previous performance capture methods, we can now also create new real-time animations of actors captured in general apparel.
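How the cloth simulation parameters are estimated is not detailed here. As a minimal sketch of the general idea (choose simulator parameters so that the simulated motion reproduces the captured motion), the example below replaces the real-time cloth simulator with a hypothetical 1D mass-spring chain pinned at the top and replaces the optimizer with an exhaustive grid search. The names `simulate`, `trajectory_error` and `estimate_parameters` are illustrative assumptions, not the authors' code.

```python
# Hypothetical toy example: recover the stiffness and damping of a 1D
# mass-spring chain (a stand-in for a cloth simulator) from a reference
# trajectory. This is NOT the method used in the paper.
from itertools import product


def simulate(stiffness, damping, n=5, steps=200, dt=0.01, rest=0.1, gravity=-9.81):
    """Simulate a vertical chain of n unit-mass particles pinned at the top.

    Uses semi-implicit Euler integration with per-step velocity damping and
    returns the height of every particle at every time step.
    """
    pos = [-i * rest for i in range(n)]  # springs start at their rest length
    vel = [0.0] * n
    frames = []
    for _ in range(steps):
        force = [gravity] * n
        for i in range(n - 1):
            stretch = (pos[i] - pos[i + 1]) - rest  # > 0 when the spring is stretched
            f = stiffness * stretch
            force[i] -= f      # a stretched spring pulls the upper particle down
            force[i + 1] += f  # ... and the lower particle up
        for i in range(1, n):  # particle 0 is pinned (attached to the body)
            vel[i] = (vel[i] + dt * force[i]) * (1.0 - damping)
            pos[i] += dt * vel[i]
        frames.append(list(pos))
    return frames


def trajectory_error(a, b):
    """Mean squared height difference over all particles and frames."""
    diffs = [(pa - pb) ** 2 for fa, fb in zip(a, b) for pa, pb in zip(fa, fb)]
    return sum(diffs) / len(diffs)


def estimate_parameters(reference, stiffness_grid, damping_grid):
    """Exhaustive grid search for the (stiffness, damping) pair whose
    simulation best reproduces the reference trajectory."""
    return min(
        product(stiffness_grid, damping_grid),
        key=lambda kd: trajectory_error(simulate(*kd), reference),
    )
```

For example, generating a reference with `simulate(200.0, 0.02)` and searching the grid `[50.0, 100.0, 200.0, 400.0]` × `[0.0, 0.01, 0.02, 0.05]` recovers `(200.0, 0.02)`, because that pair reproduces the reference exactly. With real data, the error would instead be measured against the reconstructed surface geometry, and a grid search is only practical for a handful of parameters.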

Images/Videos

(a) Multi-view video sequence of a reference performance. (b) Estimated skeleton motion and deforming surface. (c) Cloth segmentation (regions of loose apparel in red). (d) Statistical body model fitted to reference geometry. (e) Estimated collision proxies. (f) After optimal cloth simulation parameters are found, arbitrary new animations can be created.
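The cloth segmentation in (c) separates loose apparel, which deforms non-rigidly, from surface regions that follow the skeleton. The exact criterion is not given on this page. One plausible sketch, assuming linear blend skinning as the skeleton-driven surface model, flags each vertex whose captured position deviates on average over the sequence from the position the skeleton alone predicts. The 2D setting, the threshold, and the function names below are hypothetical.

```python
# Hypothetical sketch: flag vertices whose captured motion is poorly explained
# by skeleton-driven linear blend skinning (2D for brevity).
import math


def transform(angle, tx, ty, p):
    """Apply a 2D rigid transform: rotate p by `angle`, then translate."""
    c, s = math.cos(angle), math.sin(angle)
    x, y = p
    return (c * x - s * y + tx, s * x + c * y + ty)


def skin(rest_vertex, weights, bone_transforms):
    """Linear blend skinning: weighted sum of the vertex moved by each bone."""
    x = y = 0.0
    for w, (angle, tx, ty) in zip(weights, bone_transforms):
        px, py = transform(angle, tx, ty, rest_vertex)
        x += w * px
        y += w * py
    return (x, y)


def segment_cloth(rest_vertices, weights, frames, captured, threshold):
    """Return one flag per vertex: True if its mean skinning residual exceeds
    `threshold`.

    frames[t] holds the (angle, tx, ty) transform of every bone at time t;
    captured[t][v] is the captured position of vertex v at time t.
    """
    flags = []
    for v, rest in enumerate(rest_vertices):
        residual = 0.0
        for bones, shape in zip(frames, captured):
            sx, sy = skin(rest, weights[v], bones)
            cx, cy = shape[v]
            residual += math.hypot(cx - sx, cy - sy)
        flags.append(residual / len(frames) > threshold)
    return flags
```

With a single bone rotating by 90°, a vertex that follows the bone exactly gets a residual near zero and is kept as body, while a vertex that is displaced sideways by 0.3 in every frame is flagged as apparel for a threshold of 0.1. Averaging over the whole sequence keeps brief deviations from flagging a vertex; in practice the threshold would need to be tuned to the reconstruction noise.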

Publications

Stoll C., Gall J., de Aguiar E., Thrun S., and Theobalt C., Video-based Reconstruction of Animatable Human Characters, ACM Transactions on Graphics (SIGGRAPH Asia 2010), Vol. 29, No. 6, 2010. © ACM

Gall J., Stoll C., de Aguiar E., Theobalt C., Rosenhahn B., and Seidel H.-P., Motion Capture Using Joint Skeleton Tracking and Surface Estimation, IEEE Conference on Computer Vision and Pattern Recognition (CVPR'09), 2009. © IEEE